Integrating AI into Developer Workflow

There’s been some chatter recently [1] around research conducted by METR, an AI-research non-profit, that found AI usage led to a 19% decrease in productivity in a test group of developers [2]. That’s certainly not what anyone expected from the study, and it contrasts sharply with earlier research from MIT, which reported productivity gains of around 26% when knowledge workers adopted AI tools [3].

So, what explains these vastly different results, and how should developers be working with AI?

What the Studies Actually Measured

The MIT study was a large-scale experiment involving roughly 5,000 knowledge workers using GitHub Copilot, the first widely used AI coding assistant, over an 18-month period. It found an average productivity increase of 26%, measured through pull requests, code commits, builds, and build success rates.

In contrast, the recent METR study focused on 16 experienced open-source software developers over a 5-month period. These developers worked within projects they were already very familiar with, and they provided 246 specific issues that they believed AI would be useful for. Each issue was randomly assigned to either “AI allowed” or “AI disallowed” to create the treatment and control conditions. Each developer could use whatever AI assistant they preferred, and most used Cursor (an AI-enabled coding environment) with Claude 3.5 and 3.7, the latest versions of Anthropic’s large language model (LLM) at the time of the experiment. The 19% drop in productivity here was certainly unexpected.

Making Sense of the Differences

So what’s going on with this METR study? Why is it so outside what we would have expected?

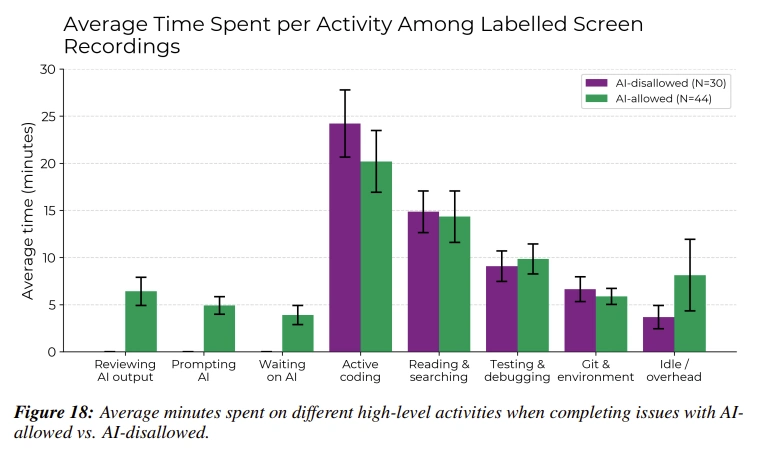

First, it’s important to see a breakdown of how time was spent in AI vs non-AI tasks:

As you can see, when AI was allowed, there were time savings in all the areas you would expect:

- Active coding

- Reading & searching

- Working with Git (the most widely used version control system) and the coding environment

The productivity losses came from:

- Additional testing

- Working directly with the AI

- Idle time (scrolling social media, etc.)

And that actually makes a lot of sense. There’s a lot we’re still learning about how to work with these tools:

- How to prompt efficiently

- Which models to use

- When to use AI (and when not to)

- How to handle context

- How to be productive while waiting for the AI to finish

Rather than calling AI’s usefulness into question, the study seems to highlight that this is a new mode of working, and one that comes with a learning curve.

When I’ve Found AI Helpful (and When I Haven’t)

In my experience, AI tools aren’t helpful or unhelpful across the board — it really depends on how they’re used.

Effective Uses

- Refactoring

- Debugging intricate, repetitive issues

- Simple, short-lived utility programs

- Brainstorming

For example, I recently encountered a CSS issue where inconsistent breakpoint settings (like max-width: 767px and min-width: 768px) caused layout breaks on specific screens. Fixing this manually across multiple files would have been tedious and time-consuming. Instead, I used Gemini 2.5 with the RooCode extension for VS Code (a popular code editor) to make all the changes for me while I was in a meeting. It saved me hours.
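To make the breakpoint problem concrete, here is a minimal sketch of how such inconsistencies could be surfaced before handing the cleanup to an AI tool. It is not the process I actually used; the function names, the sample CSS, and the "a max-width of N should pair with a min-width of N+1" heuristic are all assumptions for illustration, and the regex only handles simple pixel-based media queries written on one line.

```python
import re
from collections import Counter

# Matches simple width conditions such as "(max-width: 767px)".
# Assumption: breakpoints are whole pixel values inside parentheses.
BREAKPOINT_RE = re.compile(r"\(\s*(min|max)-width\s*:\s*(\d+)px\s*\)")


def collect_breakpoints(css_text: str) -> Counter:
    """Count each (kind, pixel value) pair used on @media lines."""
    counts: Counter = Counter()
    for line in css_text.splitlines():
        if "@media" not in line:
            continue
        for kind, value in BREAKPOINT_RE.findall(line):
            counts[(kind, int(value))] += 1
    return counts


def unpaired_breakpoints(counts: Counter) -> tuple[list[int], list[int]]:
    """Return (max-width values with no min-width at value + 1,
    min-width values with no max-width at value - 1)."""
    maxes = {value for kind, value in counts if kind == "max"}
    mins = {value for kind, value in counts if kind == "min"}
    lonely_max = sorted(v for v in maxes if v + 1 not in mins)
    lonely_min = sorted(v for v in mins if v - 1 not in maxes)
    return lonely_max, lonely_min


# Hypothetical stylesheet: the third rule uses 768px where 767px was intended.
SAMPLE_CSS = """
@media (max-width: 767px) { .nav { display: none; } }
@media (min-width: 768px) { .nav { display: flex; } }
@media (max-width: 768px) { .hero { padding: 0; } }
"""

counts = collect_breakpoints(SAMPLE_CSS)
print(unpaired_breakpoints(counts))
```

On the sample stylesheet, the 768px max-width rule is the one that gets flagged, because it overlaps the 768px min-width rule at exactly one screen width. A real project would need to read the CSS files from disk and would likely hit legitimate exceptions to the pairing heuristic.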

Less Effective Uses

- Minor UI adjustments where visual judgment is key

- Small, known-issue fixes that experienced developers can resolve faster manually

In such scenarios, the noise of irrelevant AI suggestions can become counterproductive, distracting developers rather than assisting them.

Suggestions for AI in Coding

To get the most out of AI, it helps to be intentional.

Provide Comprehensive Context

Ensure your AI tool has deep knowledge of your project’s structure and codebase, not just isolated snippets. This means if you’ve only been pasting code into ChatGPT, Gemini, or Claude, you’re not getting the full benefit of these tools. Consider using Cursor or Claude Code (or RooCode, like me) instead, since your AI of choice needs access to your entire project.

Documentation isn’t just for developers – you can use it to provide context for your AI, especially when working in exceptionally large codebases. Many AI coding tools can read a project-level instructions or rules file, which is a natural place to summarize architecture and conventions; check your tool’s documentation for the best way to set this up.
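As one illustration of documentation-as-context, the sketch below builds a short project overview that could be saved as a file and pointed to from an AI tool. Everything here is an assumption for the sake of example: the function name, the list of skipped directories, and the output layout are hypothetical, and which file (if any) a given tool reads automatically depends on that tool.

```python
from pathlib import Path

# Directories that usually add noise rather than context (an assumption;
# adjust for your own project).
SKIP_DIRS = {".git", "node_modules", "__pycache__", "dist", "build"}


def build_context_summary(root: Path, notes: str = "", max_files: int = 200) -> str:
    """Return a plain-text overview: optional notes followed by a file list."""
    files = []
    for path in sorted(root.rglob("*")):
        rel = path.relative_to(root)
        # Skip anything that lives under an ignored directory.
        if any(part in SKIP_DIRS for part in rel.parts):
            continue
        if path.is_file():
            files.append(rel.as_posix())

    lines = ["# Project context", ""]
    if notes:
        lines += [notes.strip(), ""]
    lines.append("## Files")
    lines += [f"- {name}" for name in files[:max_files]]
    if len(files) > max_files:
        lines.append(f"- ... and {len(files) - max_files} more")
    return "\n".join(lines) + "\n"
```

Calling `build_context_summary(Path("."), notes="...")` from the project root returns the overview as a string. The hand-written notes matter more than the file list: a few sentences on architecture and conventions are what a plain directory walk cannot supply.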

Craft Thoughtful Prompts

Clearly outline your goals, constraints, and architectural nuances when prompting AI. Ask it what its capabilities are. Ask if there’s a better model for what you’re trying to achieve. The more you work with it, the better you’ll get at this. Also, the How I AI podcast is a great resource for tips in this area.
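One way to make "goals, constraints, and architectural nuances" a habit is to give every prompt the same skeleton. The sketch below is only an illustration of that idea: the `TaskPrompt` class, its field names, and the example content are hypothetical and not tied to any particular AI tool or API.

```python
from dataclasses import dataclass, field


@dataclass
class TaskPrompt:
    """A hypothetical container for the parts of a well-scoped prompt."""
    goal: str
    constraints: list[str] = field(default_factory=list)
    architecture_notes: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Join the non-empty sections into one block of prompt text."""
        sections = [f"Goal:\n{self.goal.strip()}"]
        if self.constraints:
            bullets = "\n".join(f"- {item}" for item in self.constraints)
            sections.append(f"Constraints:\n{bullets}")
        if self.architecture_notes:
            bullets = "\n".join(f"- {item}" for item in self.architecture_notes)
            sections.append(f"Architecture notes:\n{bullets}")
        return "\n\n".join(sections)


# Example content is made up for illustration.
prompt = TaskPrompt(
    goal="Make all CSS breakpoints consistent across the stylesheets.",
    constraints=[
        "Do not change any selectors",
        "Keep mobile rules at max-width: 767px",
    ],
    architecture_notes=["Styles live in one file per component"],
)
print(prompt.render())
```

The structure itself is the point: if the constraints or architecture sections are hard to fill in, that is usually a sign the task is not yet clear enough to delegate.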

Check the Output

Treat AI’s contributions just as you would those of any other developer. Make sure you understand the code it created, and don’t just trust that everything was executed well.

Tools I’ve Found Useful

I’ve personally found Cursor to be an exceptionally good AI-enabled code editor. I used Claude 3.7 when I worked with it (only because 4 wasn’t out yet). I later moved to the RooCode extension in VS Code because the experience was very close to Cursor’s. RooCode (like Cursor) lets you use whichever AI model you prefer, so I switched to Gemini 2.5 since I already had a subscription, and the results have been very consistent. I haven’t tried Claude Code or Gemini CLI yet, but I’ve heard very good things and plan to check them out soon.

These frontier models have become exceptionally good, and it seems the only wrong choice right now is not using any of them at all.

Final Thoughts

Overall, these studies remind me that using AI effectively is more about careful integration and experimentation than about expecting it to solve everything automatically. When approached thoughtfully, I’ve found AI tools to be a major productivity boost, not a distraction.

1 "Study Finds AI Tools Made Open Source Software Developers 19 Percent Slower," Ars Technica, July 14, 2025, https://arstechnica.com/ai/2025/07/study-finds-ai-tools-made-open-source-software-developers-19-percent-slower/;

"AI Slows Down Some Experienced Software Developers, Study Finds," Reuters, July 10, 2025, https://www.reuters.com/business/ai-slows-down-some-experienced-software-developers-study-finds-2025-07-10/;

"AI Coding Productivity Study," Axios, July 15, 2025, https://www.axios.com/2025/07/15/ai-coding-productivity-study.

2 METR, "Early 2025 AI Experienced OS Dev Study," July 10, 2025, https://metr.org/blog/2025-07-10-early-2025-ai-experienced-os-dev-study/.

3 Shakked Noy and Whitney Zhang, Experimental Evidence on the Productivity Effects of Generative Artificial Intelligence, MIT Department of Economics, April 2023, https://economics.mit.edu/sites/default/files/inline-files/draft_copilot_experiments.pdf.